Brian Bartoldson Prospectus Defense

Department of Scientific Computing

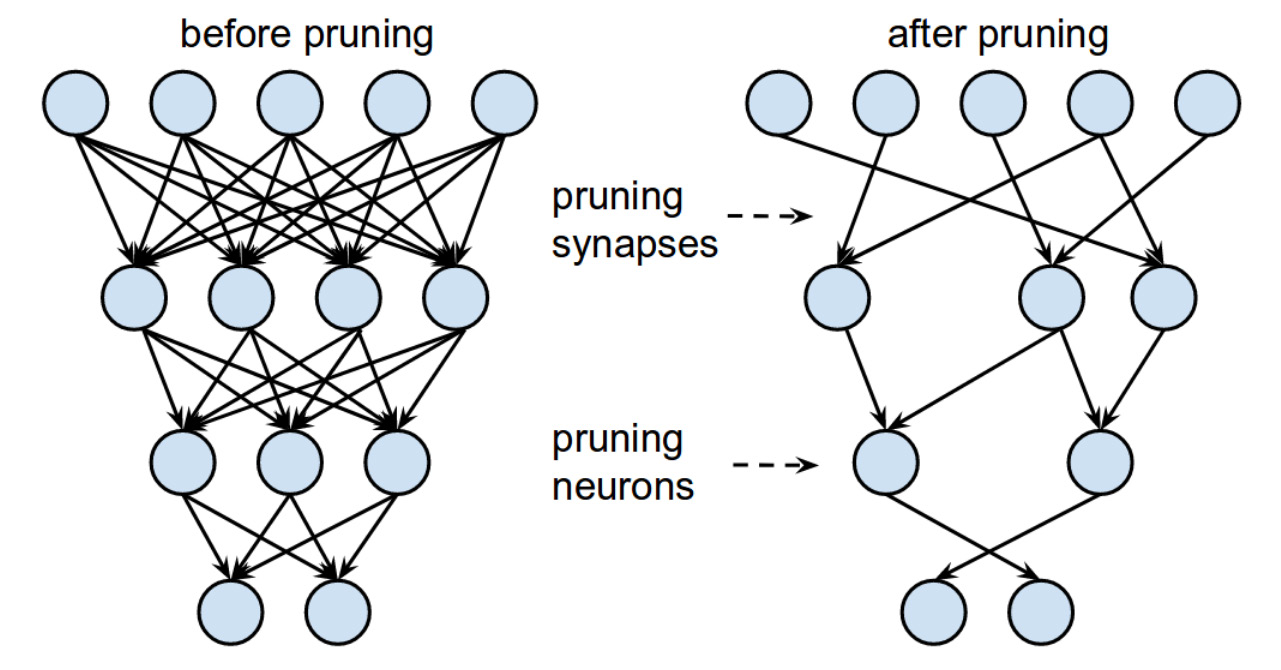

"Pruning Artificial Neural Network Weights"

01:00 P.M. Friday, April 27, 2018

499 Dirac Science Library

Abstract:

Parameter-pruning algorithms reduce the computational and memory costs of artificial neural networks (NNs); some can remove over 90% of connection weights without harming performance. Removing weights from an NN is also a form of regularization, so pruning can potentially improve an NN's performance on previously unseen data. Despite this, algorithms in the pruning literature do not report improvements in the pruned NN's ability to generalize to unseen data. Our preliminary experiments suggest that pruning can lead to better generalization if it targets large rather than small weights. Applying our pruning algorithm to an NN yields higher image classification accuracy on CIFAR-10 test data (a proxy for unseen, general data) than applying the popular regularizer dropout, and this accuracy gain comes with an 85% reduction in the NN's parameter count. This prospectus describes completed and proposed work aimed at understanding how to construct an NN pruning algorithm that both compresses the network and enhances its ability to generalize.
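To make the contrast between targeting small and large weights concrete, here is a minimal sketch of one-shot magnitude-based pruning over a flat list of weights. It is not the algorithm from this prospectus: the function names `prune_mask` and `apply_mask` and the `target` parameter are hypothetical, and `target="large"` is only an illustrative reading of "pruning that targets large weights". A real implementation would operate on each layer's weight tensor and typically interleave pruning with retraining.

```python
def prune_mask(weights, fraction, target="small"):
    """Return a 0/1 mask that removes `fraction` of the weights by magnitude.

    target="small": remove the smallest-magnitude weights (classic magnitude pruning).
    target="large": remove the largest-magnitude weights instead (illustrative only).
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    n_prune = int(round(fraction * len(weights)))
    # Indices sorted by ascending magnitude; ties are broken by index for determinism.
    order = sorted(range(len(weights)), key=lambda i: (abs(weights[i]), i))
    if target == "small":
        pruned = set(order[:n_prune])
    elif target == "large":
        pruned = set(order[len(order) - n_prune:])
    else:
        raise ValueError("target must be 'small' or 'large'")
    # 0 marks a removed connection, 1 marks a kept one.
    return [0 if i in pruned else 1 for i in range(len(weights))]


def apply_mask(weights, mask):
    """Zero out pruned weights via an element-wise product with the mask."""
    return [w * m for w, m in zip(weights, mask)]


if __name__ == "__main__":
    w = [0.5, -0.05, 1.2, -0.9, 0.01, 0.3]
    print(prune_mask(w, 0.5, "small"))  # keeps 0.5, 1.2, -0.9
    print(prune_mask(w, 0.5, "large"))  # keeps -0.05, 0.01, 0.3
```

With `fraction=0.85`, either mode keeps only 15% of the weights, matching the scale of parameter reduction reported above; the two modes differ only in *which* weights survive.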

"Han et al. Learning both weights and connections for efficient neural network, NIPS 2015"